Speeding up Radar with Multi-Core Processing

Introduction

In my previous blog post, I outlined a distributed computing system I’d developed to run our Radar acoustic analysis software across multiple servers concurrently, reducing overall processing time by sharing the load. Since then, Radar has got even faster thanks to further development to fully support multi-core processing. In this post, I’ll explain what this means, outline the motivation and objectives for the development and share some test results that demonstrate what a huge step up in performance it has achieved. And don’t worry, I’ll skip over the implementation details this time for an easier read!

Motivation

Radar is SME Water’s acoustic noise logging analysis suite. By combining water network data from GIS with deployment and fleet status data from logger suppliers, we’re able to offer our clients insights including:

- Maximum achievable acoustic coverage

- Optimised logger deployment plans

- Fixed network logging performance analysis

- “Lift and shift” logger deployment performance analysis

To produce these insights, Radar uses geospatial analysis algorithms developed in-house. The optimiser does some especially heavy lifting to maximise acoustic noise logging coverage in each District Metered Area (DMA) with the fewest loggers possible. The optimiser is therefore the focus of my efforts to reduce run time, so that Radar’s analysis can keep up with demand as more water companies come on board and as its outputs are increasingly used to inform day-to-day operational decisions.

Earlier work (as outlined in my previous blog post) reduced run time by sharing the analysis of an input list of DMAs across multiple “worker” computers. Figure 1 shows an example of this approach in a Radar optimiser run covering a water company’s entire distribution network. In this timeline plot, time runs along the x-axis, with each row representing one of three computers working in parallel. Each coloured bar represents the optimisation analysis for one DMA in the input list.

Figure 1 – Radar optimiser timeline plot (before multi-core support)

This approach yielded a significant reduction in run time, but profiling the Radar optimiser’s system resource usage soon made it clear that performance might be improved further by also using multi-core processing to analyse multiple DMAs on each server computer concurrently. Having recently built a new server computer dedicated to running Radar analysis, I decided it was time for some software design and implementation work to make the most of the new hardware.

Aims & Objectives

The objectives for the development were clear:

- Update the Radar software architecture to support concurrent analysis of multiple DMAs on each server computer

- Verify that analysis outputs from the new software version are correct and identical to those from the earlier version

- Evaluate the relationship between analysis run time and the number of concurrent worker sessions on each server to quantify the speed-up and identify optimal parameters

Performance Comparison

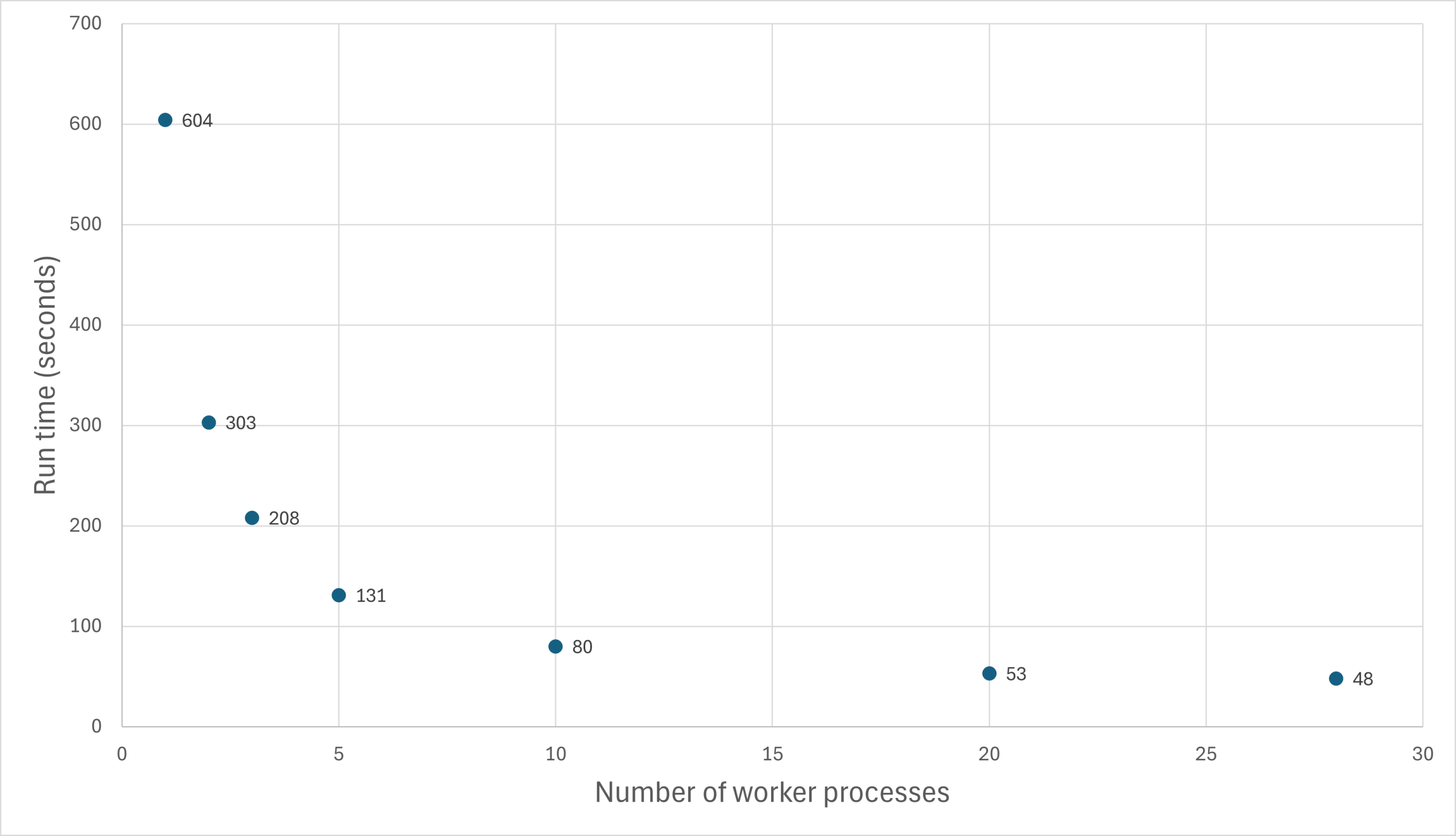

After implementing the software changes and ensuring analysis outputs were correct, I used a test dataset of 76 real DMAs to evaluate optimiser run time. The total run time to complete analysis of all test DMAs was recorded for a single session per worker, then for 2 sessions, 3 sessions and so on, in a sweep that made increasing use of multi-core processing until diminishing returns were reached. The results for the new dedicated Radar server are plotted in Figure 2, where each data point is annotated with its run time (y-axis value).

Figure 2 – Optimiser performance profile with multi-core processing (Radar server)
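For readers who like to see the shape of such a test, the session sweep described above can be sketched in a few lines of Python. This is only a minimal illustration, not Radar’s implementation: `analyse_dma` and `timed_run` are hypothetical names, the “analysis” is placeholder busy work, and the choice of Python’s standard `concurrent.futures` process pool is an assumption made for the example.

```python
import time
from concurrent.futures import ProcessPoolExecutor


def analyse_dma(dma_id: int) -> int:
    """Hypothetical stand-in for one DMA's optimiser run (CPU-bound busy work)."""
    total = 0
    for i in range(50_000):
        total += (i * dma_id) % 7
    return total


def timed_run(dma_ids: list[int], sessions: int) -> tuple[float, list[int]]:
    """Analyse every DMA using `sessions` worker processes; return (seconds, results)."""
    start = time.perf_counter()
    with ProcessPoolExecutor(max_workers=sessions) as pool:
        results = list(pool.map(analyse_dma, dma_ids))
    return time.perf_counter() - start, results


if __name__ == "__main__":
    dma_ids = list(range(1, 77))  # 76 placeholder DMAs, matching the test set size
    baseline_time, baseline_results = timed_run(dma_ids, sessions=1)
    print(f"1 session: {baseline_time:.2f} s")
    for sessions in (2, 4, 8):
        elapsed, results = timed_run(dma_ids, sessions)
        # Outputs must not depend on how many sessions were used.
        assert results == baseline_results
        print(f"{sessions} sessions: {elapsed:.2f} s, speed-up {baseline_time / elapsed:.1f}x")
```

The timings it prints depend entirely on the machine it runs on, and with such small placeholder tasks the cost of starting processes may dominate, so the numbers say nothing about Radar itself. The point is the pattern: same inputs, same outputs, only the session count changes.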

While the new Radar server has 28 CPU cores, the plot shows that run time did not fall in proportion to the number of sessions (i.e., analysing with 28 sessions was not 28 times faster than with a single session). More research would be required to fully understand this, but a non-linear relationship was expected. One reason is that the optimiser analysis is not purely CPU-bound: it also performs heavy input/output (I/O) operations at times. In other words, drive access speed may be one factor limiting the performance gains.
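A rough way to picture this kind of ceiling is Amdahl’s law. It is offered here only as a mental model, not something fitted to the results above. Assume a fraction $p$ of the work scales perfectly across $n$ sessions, while the remaining $1 - p$ (for example, time spent waiting on a shared drive) does not speed up at all. The overall speed-up is then:

$$
S(n) = \frac{1}{(1 - p) + \dfrac{p}{n}}, \qquad \lim_{n \to \infty} S(n) = \frac{1}{1 - p}
$$

With a purely illustrative value of $p = 0.95$ (an assumption, not a measurement), 28 sessions would give

$$
S(28) = \frac{1}{0.05 + \dfrac{0.95}{28}} \approx 11.9
$$

and no number of sessions could ever exceed a speed-up of 20. Even a small non-parallel share is enough to flatten the curve well before the core count is reached.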

Nevertheless, a speed-up of more than 10 times is cause for celebration!

Use in Production

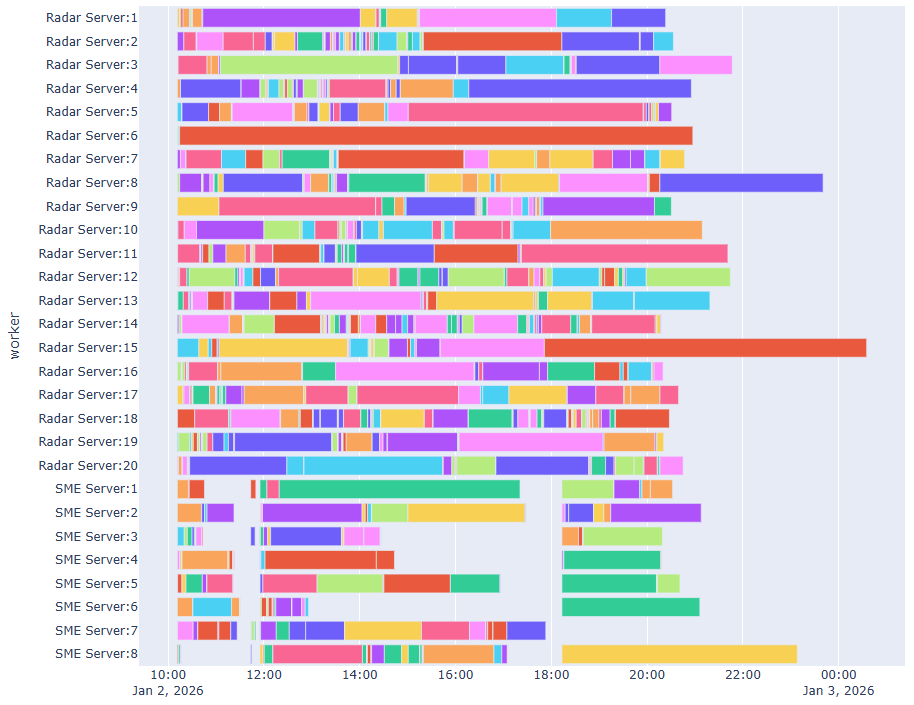

Behind the scenes, I’ve also been hard at work making improvements to smooth out our process for releasing new Radar software versions. This paid off as, after completing testing, I was able to get multi-core support released quickly and easily into the production environments for our existing Radar clients. Figure 3 shows a timeline for analysing the same set of DMAs as in Figure 1. Notice how, despite some intermittent connection issues on one server (gaps in the timeline), the full company network optimisation now finishes in a matter of hours rather than several days! The analysis was conducted across two server computers, with the new Radar server using 20 worker sessions and the other using 8, based on the performance profiling done during testing. Each row in the plot now represents a (numbered) worker session, rather than a separate computer.

Figure 3 – Radar optimiser timeline plot (with multi-core support)

Besides the connection issues, it’s also clear from the timeline plot that some larger, more complex DMAs take much longer to analyse than other, simpler areas. This is because the calculations the optimiser must perform become increasingly complex as the solution progresses. Looking at this plot, it occurred to me that sorting the input list so that the most complex areas are always allocated for processing early in the run would be beneficial. This could help prevent situations where most worker sessions sit idle while a few continue to work on stubborn areas, and could have reduced the overall run time by several hours in this case.
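The effect of that sorting idea can be shown with a small simulation. This is a sketch under stated assumptions rather than anything from Radar: the run times are made up, `simulate_makespan` is a hypothetical helper, and it assumes each DMA is simply handed to whichever session becomes free first. In practice the true run time isn’t known in advance, so the sort would have to use some proxy for complexity.

```python
import heapq


def simulate_makespan(durations: list[float], sessions: int) -> float:
    """Greedy simulation: each DMA goes to whichever session becomes free first."""
    free_at = [0.0] * sessions  # min-heap of times at which each session is next idle
    heapq.heapify(free_at)
    for duration in durations:
        start = heapq.heappop(free_at)
        heapq.heappush(free_at, start + duration)
    return max(free_at)


# Made-up run times in hours: many quick DMAs followed by two stubborn ones.
durations = [1.0] * 12 + [6.0, 8.0]
sessions = 4

as_listed = simulate_makespan(durations, sessions)
longest_first = simulate_makespan(sorted(durations, reverse=True), sessions)
print(f"as listed: {as_listed:.1f} h, longest first: {longest_first:.1f} h")
# prints "as listed: 11.0 h, longest first: 8.0 h"
```

In this toy case, starting the two long jobs first lets the short ones fill in around them, so the run finishes as soon as the longest single DMA is done instead of three hours later.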

Final Thoughts

As I continue to improve my computer science and software skills, I’m finding it highly rewarding to apply myself to making Radar a successful product. Since my last blog post, I’ve been able to set up a dedicated Radar analysis server, streamline our software release process and reduce the optimiser analysis run time by a factor of ten! With further developments on the horizon and new ideas joining the backlog all the time, there’s plenty of work still to be done, but I think it’s safe to say that 2025 has been a successful year.

As an engineer turned software developer, I’m thankful for the opportunity I have at SME Water to combine and expand my skills in these areas while breaking new ground in the industry. I look forward to the challenges 2026 will bring, and the lessons I’ll learn along the way.